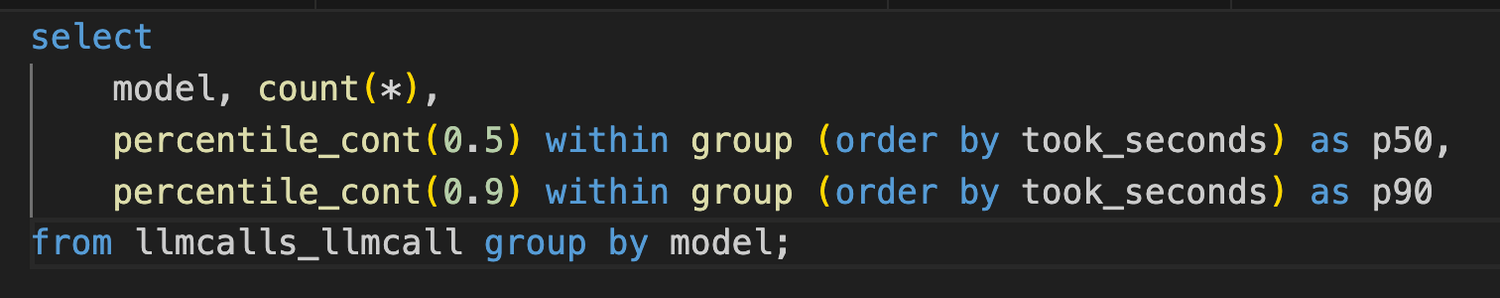

"I recently implemented a feature here on my own blog that uses OpenAI's GPT to help me correct spelling and punctuation in posted blog comments. Because I was curious, and because the scale is so small, I take the same prompt and fire it off three times. The pseudo code looks like this:"

```python
for model in ("gpt-5", "gpt-5-mini", "gpt-5-nano"):
    response = completion(
        model=model,
        api_key=settings.OPENAI_API_KEY,
        messages=messages,
    )
    record_response(response)
```
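
The quoted loop doesn't show where the duration is measured. Here is a minimal sketch that assumes the number of interest is the wall-clock time of each individual API call. The names `call_model`, `time_models`, `TimedResponse`, and `fake_call_model` are hypothetical. The fake function stands in for the real request so the example runs offline. The `completion()` in the quote looks like LiteLLM's function of that name, but that is a guess. Any client call could be wrapped the same way.

```python
import time
from dataclasses import dataclass
from typing import Callable

# The three models from the quoted loop.
MODELS = ("gpt-5", "gpt-5-mini", "gpt-5-nano")

# Hypothetical signature: takes a model name and chat messages, returns the reply text.
CallModel = Callable[[str, list[dict]], str]


@dataclass
class TimedResponse:
    """One model's reply plus the wall-clock time the call took."""
    model: str
    response: str
    seconds: float


def time_models(
    call_model: CallModel,
    messages: list[dict],
    models: tuple[str, ...] = MODELS,
) -> list[TimedResponse]:
    """Send the same messages to every model, timing each call separately."""
    records = []
    for model in models:
        start = time.perf_counter()  # monotonic, high-resolution clock
        response = call_model(model, messages)
        elapsed = time.perf_counter() - start
        records.append(TimedResponse(model, response, elapsed))
    return records


def fake_call_model(model: str, messages: list[dict]) -> str:
    """Hypothetical stand-in for a real API call: sleeps briefly, echoes the input."""
    time.sleep(0.01)
    return messages[-1]["content"].strip()


if __name__ == "__main__":
    messages = [{"role": "user", "content": "  Plese fix my speling.  "}]
    for record in time_models(fake_call_model, messages):
        print(f"{record.model}: {record.seconds:.3f}s -> {record.response!r}")
```

Passing the client in as a function keeps the timing logic separate from the network code. That way it can be exercised without an API key.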

"The price difference is large. That's easy to measure; it's on their pricing page. But the speed difference is fairly large. I measure how long the whole thing takes and now I can calculate the median (P50) and the 90th percentile (P90) and the results currently are:"

| model      | p50 (seconds)      | p90 (seconds)     |
|------------|--------------------|-------------------|
| gpt-5      | 27.34671401977539  | 43.84699220657348 |
| gpt-5-mini | 9.814127802848816  | 16.00238153934479 |
| gpt-5-nano | 24.380277633666992 | 32.99455285072327 |
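
The post doesn't say how the percentiles were computed. The sketch below uses Python's standard `statistics` module. `p50_p90` and `latency_table` are hypothetical helper names, and the sample durations are made up purely to show the output shape. Percentile definitions differ between tools, so with only about 40 samples the P90 from another tool may not match exactly.

```python
import statistics
from collections import defaultdict


def p50_p90(durations: list[float]) -> tuple[float, float]:
    """Return (median, 90th percentile) for durations in seconds.

    method="inclusive" interpolates linearly between the closest ranks,
    the same definition NumPy's default percentile uses.
    """
    if len(durations) < 2:
        raise ValueError("need at least two durations")
    deciles = statistics.quantiles(durations, n=10, method="inclusive")
    return statistics.median(durations), deciles[8]  # 9th cut point = P90


def latency_table(samples: list[tuple[str, float]]) -> dict[str, tuple[float, float]]:
    """Group (model, seconds) samples by model and summarize each group."""
    by_model: dict[str, list[float]] = defaultdict(list)
    for model, seconds in samples:
        by_model[model].append(seconds)
    return {model: p50_p90(durations) for model, durations in sorted(by_model.items())}


if __name__ == "__main__":
    # Made-up durations, purely to show the output shape.
    samples = [("gpt-5-mini", s) for s in (8.2, 9.1, 9.8, 10.4, 15.0)]
    samples += [("gpt-5-nano", s) for s in (20.5, 23.9, 24.4, 27.0, 33.1)]
    print(f"{'model':<12} {'p50':>8} {'p90':>8}")
    for model, (p50, p90) in latency_table(samples).items():
        print(f"{model:<12} {p50:>8.2f} {p90:>8.2f}")
```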

A blog feature sends identical prompts to three models (gpt-5, gpt-5-mini, and gpt-5-nano) and records the responses for comparison. The price differences between the models are large and easy to check on OpenAI's pricing page. Response quality requires subjective judgment and ongoing comparison. Measured latencies in seconds, as median (P50) and 90th percentile (P90), are: gpt-5 P50 27.3467, P90 43.8470; gpt-5-mini P50 9.8141, P90 16.0024; gpt-5-nano P50 24.3803, P90 32.9946. The sample is small (about 40 data points), and the setup may still benefit from tuning. Even so, gpt-5-mini is the fastest by a wide margin in this sample, with a median under 10 seconds. gpt-5-nano, despite being the smallest model, lands much closer to gpt-5 than to gpt-5-mini.
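
To put "by a wide margin" in numbers, the short calculation below divides each model's reported latency by the fastest model's. The values are copied from the quoted results. `REPORTED` and `relative_to_fastest` are illustrative names, not from the post.

```python
# Latencies in seconds (P50, P90) as reported in the quoted results.
REPORTED = {
    "gpt-5": (27.34671401977539, 43.84699220657348),
    "gpt-5-mini": (9.814127802848816, 16.00238153934479),
    "gpt-5-nano": (24.380277633666992, 32.99455285072327),
}


def relative_to_fastest(stats: dict[str, tuple[float, float]], index: int) -> dict[str, float]:
    """Divide each model's latency by the fastest model's (index 0 = P50, 1 = P90)."""
    fastest = min(values[index] for values in stats.values())
    return {model: values[index] / fastest for model, values in stats.items()}


if __name__ == "__main__":
    for label, index in (("P50", 0), ("P90", 1)):
        ratios = relative_to_fastest(REPORTED, index)
        print(label, ", ".join(f"{m} {r:.2f}x" for m, r in ratios.items()))
```

On these numbers gpt-5-mini is the 1.00x baseline. gpt-5 takes about 2.79x as long at P50 and 2.74x at P90; gpt-5-nano takes about 2.48x and 2.06x.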

Read at Peterbe
