"The Interpretable CrossExamination Technique (ICE-T) addresses the limitations of zero-shot learning by transforming qualitative data into quantifiable metrics, enhancing binary classification performance."

"ICE-T consistently improves performance over standard zero-shot baselines, particularly showcasing its utility in clinical applications where model interpretability is essential for decision-making."

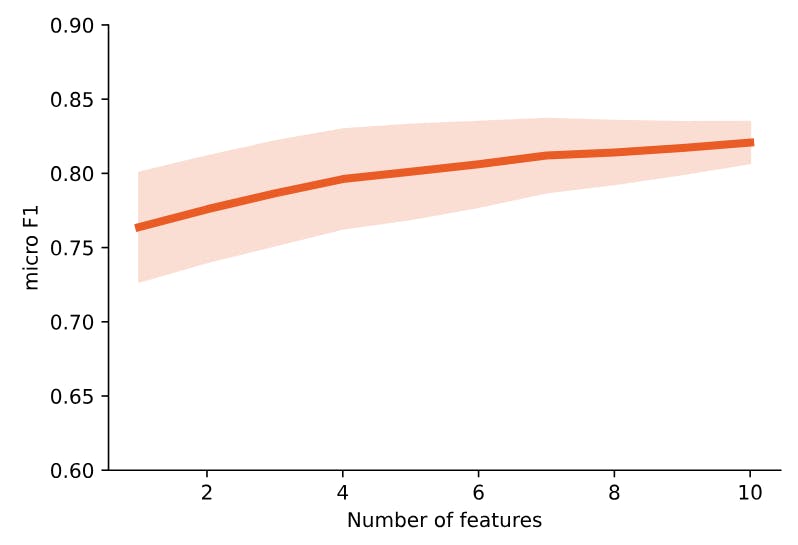

This article presents the Interpretable CrossExamination Technique (ICE-T), a prompting method that combines large language model (LLM) responses with traditional classifiers. The technique targets binary classification tasks and is designed to work around the limitations of zero-shot and few-shot learning. ICE-T uses a structured multi-prompt framework that turns qualitative data into quantifiable metrics, which a traditional classifier can then use. Results indicate that ICE-T outperforms conventional zero-shot methods across various datasets and metrics, especially in contexts where understanding model decisions is critical. The article proposes this as a shift towards more automated and accessible AI systems for a broader range of users.
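The general idea of quantifying prompt responses and handing them to a classifier can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the article's actual implementation: the yes/no/unknown answer format, the `ANSWER_SCORES` mapping, the toy data, and the hand-written logistic regression (standing in for whatever traditional classifier is used) are all hypothetical. The LLM calls themselves are omitted; the answers are hard-coded as if several prompts had already been asked about each document.

```python
import math

# Hypothetical mapping from a textual LLM answer to a number (an assumption,
# not taken from the article). Unrecognized answers fall back to 0.5.
ANSWER_SCORES = {"yes": 1.0, "no": 0.0, "unknown": 0.5}


def encode_answers(answers):
    """Turn one document's answers (one per prompt) into a numeric feature vector."""
    return [ANSWER_SCORES.get(a.strip().lower(), 0.5) for a in answers]


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit a small logistic regression with batch gradient descent."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            z = sum(wj * xj for wj, xj in zip(w, xi)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            err = p - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict(w, b, x):
    """Return 1 if the linear score is non-negative, else 0."""
    z = sum(wj * xj for wj, xj in zip(w, x)) + b
    return 1 if z >= 0 else 0


# Toy, made-up data: each row holds the answers to three hypothetical prompts
# about one document, paired with that document's binary label.
raw_answers = [
    ["yes", "yes", "unknown"],
    ["yes", "unknown", "yes"],
    ["yes", "yes", "yes"],
    ["no", "no", "unknown"],
    ["unknown", "no", "no"],
    ["no", "no", "no"],
]
y = [1, 1, 1, 0, 0, 0]

X = [encode_answers(row) for row in raw_answers]
w, b = train_logistic(X, y)

# The learned weights show how much each prompt's answer contributes,
# which is where the interpretability comes from in this sketch.
print("weights:", w, "bias:", b)
print("training predictions:", [predict(w, b, x) for x in X])
```

A linear model is used here because its weights can be read directly against the prompts, one weight per question. Any other classifier that accepts numeric feature vectors could take its place.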

Read at Hackernoon
