"'In the Header component, the Submit button should be bigger.' But what if there are multiple Submit buttons? What if styles are inherited? What if the component is reused elsewhere? The core issue is simple: there is no reliable bridge between what the developer sees in the browser and where that element lives in the codebase. Humans think visually. AI agents operate structurally. Without a mapping between those worlds, iteration becomes inefficient and token-expensive."

"When developers collaborate with AI agents on UI changes, they usually rely on one of two imperfect approaches. 'Here's a screenshot. Make the button on the right darker.' The AI sees pixels. It tries to infer which component produced them. It guesses based on layout, naming patterns, or proximity. Sometimes it's right. Sometimes it changes something adjacent. Sometimes it breaks styling entirely."

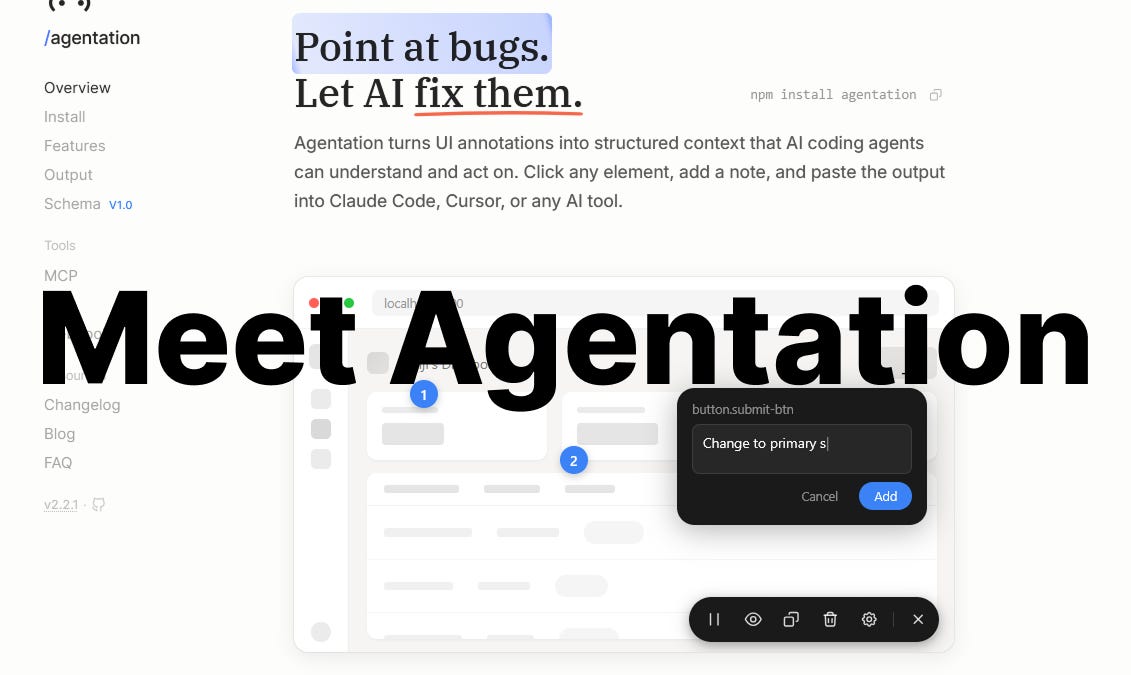

AI-assisted UI changes often fail because what a developer sees in the browser does not map reliably to component code. Developers typically fall back on pixel-based instructions or component-scoped commands, but screenshots force the AI to infer structure, and component names become ambiguous when elements are reused or inherit styles. The mismatch makes edits error-prone and token-expensive. Agentation adds an annotation layer to React apps that links DOM elements to their code locations. Developers enter annotation mode, click elements in the running app, and attach instructions that agents use to make precise edits. This mapping reduces miscommunication and speeds up UI iteration.
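To make the idea of linking a clicked element to its code location concrete, here is a minimal sketch. It is not Agentation's actual API or implementation: the `data-source` attribute, the `ElementLike` and `Annotation` types, the `findSourceLocation` and `annotate` functions, and the `src/Header.tsx:12` path are all hypothetical, chosen only for illustration. The sketch assumes some build step has stamped each rendered element (or one of its ancestors) with a `file:line` string, and it uses a plain object in place of a real DOM node so it can run outside a browser.

```typescript
// Minimal structural stand-in for a DOM element (hypothetical, for illustration).
interface ElementLike {
  tagName: string;
  attributes: Record<string, string>;
  parent: ElementLike | null;
}

// What an agent would receive: a code location plus the developer's instruction.
interface Annotation {
  file: string;
  line: number;
  instruction: string;
}

// Assumed attribute name carrying "path/to/file.tsx:LINE"; not a real library convention.
const SOURCE_ATTR = "data-source";

// Walk from the clicked element up through its ancestors until one carries
// a parseable source location.
function findSourceLocation(
  el: ElementLike | null
): { file: string; line: number } | null {
  for (let node: ElementLike | null = el; node !== null; node = node.parent) {
    const raw = node.attributes[SOURCE_ATTR];
    if (raw === undefined) continue;
    const sep = raw.lastIndexOf(":");
    if (sep <= 0) continue; // no file part before the colon
    const line = Number.parseInt(raw.slice(sep + 1), 10);
    if (Number.isNaN(line)) continue; // malformed line number, keep climbing
    return { file: raw.slice(0, sep), line };
  }
  return null;
}

// Attach a developer instruction to the resolved code location.
function annotate(el: ElementLike, instruction: string): Annotation | null {
  const loc = findSourceLocation(el);
  return loc === null ? null : { file: loc.file, line: loc.line, instruction };
}

// Example: the button itself has no location, so its parent's is used.
const header: ElementLike = {
  tagName: "HEADER",
  attributes: { [SOURCE_ATTR]: "src/Header.tsx:12" },
  parent: null,
};
const submitButton: ElementLike = {
  tagName: "BUTTON",
  attributes: {},
  parent: header,
};
console.log(annotate(submitButton, "Make this button bigger"));
```

The point of the design is that the instruction travels with a file and line rather than with a screenshot or a component name, so the agent does not have to guess which of several Submit buttons was meant.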

Read at Substack

