#pretraining-data

from HackerNoon

1 year ago

Across Metrics and Prompts, Frequent Concepts Outperform in Zero-Shot Learning | HackerNoon

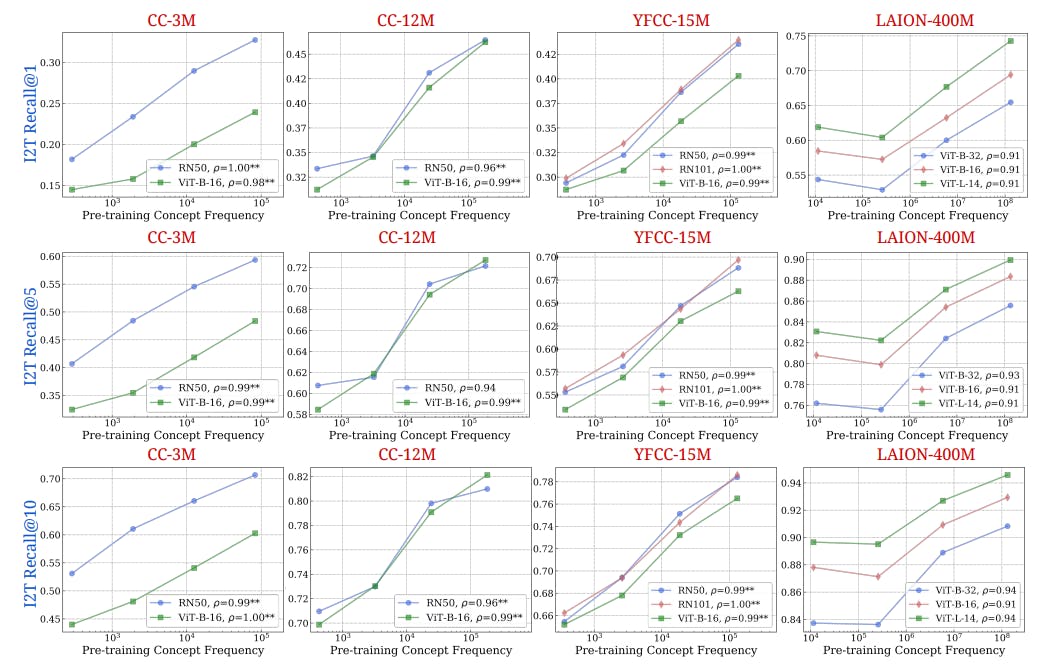

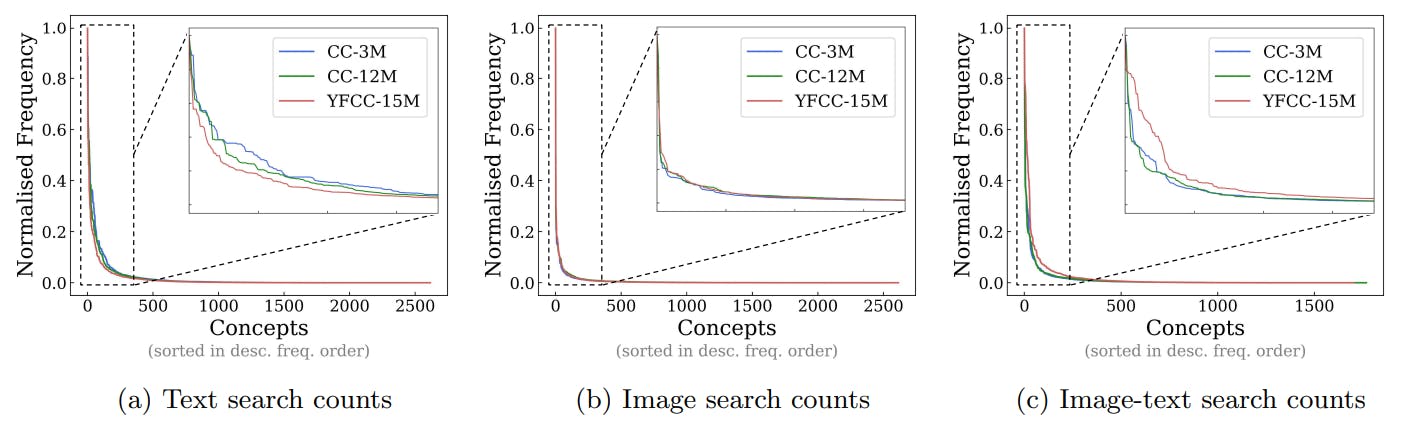

The strong log-linear trend between concept frequency and zero-shot performance holds across different prompting strategies: concepts that appear more often in the pretraining data yield better performance.

Artificial intelligence