Wolfe emphasizes that while neural networks generalize within their training data, they struggle significantly with out-of-distribution examples, leading to failures in their reasoning and outputs.

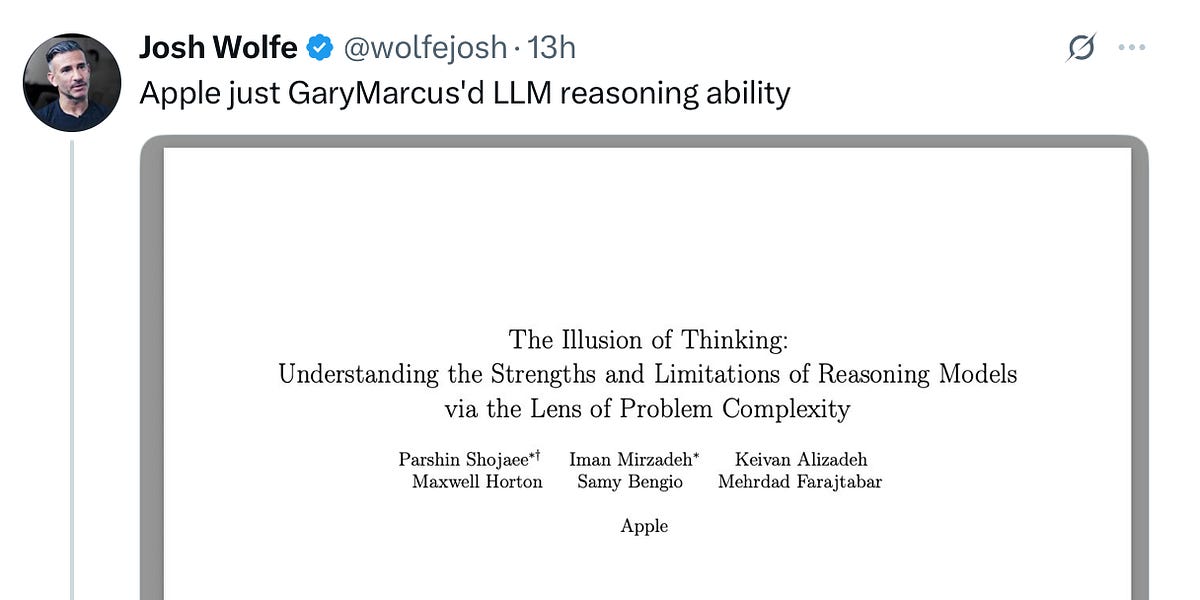

Josh Wolfe, a venture capitalist at Lux Capital, discusses a new Apple paper that casts doubt on the reliability of large language models (LLMs). Wolfe highlights a key argument: neural networks can generalize within their training data but are prone to failure when faced with out-of-distribution scenarios. This has been a long-standing concern for Wolfe, who traces similar critiques back to his earlier work. The article also cites Rao's findings that the apparent reasoning of LLMs often misrepresents the processes that actually produce their outputs, and that users tend to anthropomorphize model responses in misleading ways.

Read at Substack
