""I had not realized ... that extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.""

"My most recent use of ChatGPT was to ask the bot to revive a boring email subject line. I spoke to it politely, automatically, like I would to a person. I considered prompting it to be neutral in tone and to avoid using the first person, but found myself resistant to that, like it might make the exchange less pleasant for us both. If I'm being totally honest, I was also acting in case of the future hypothetical scenario in which the bots might hold a grudge."

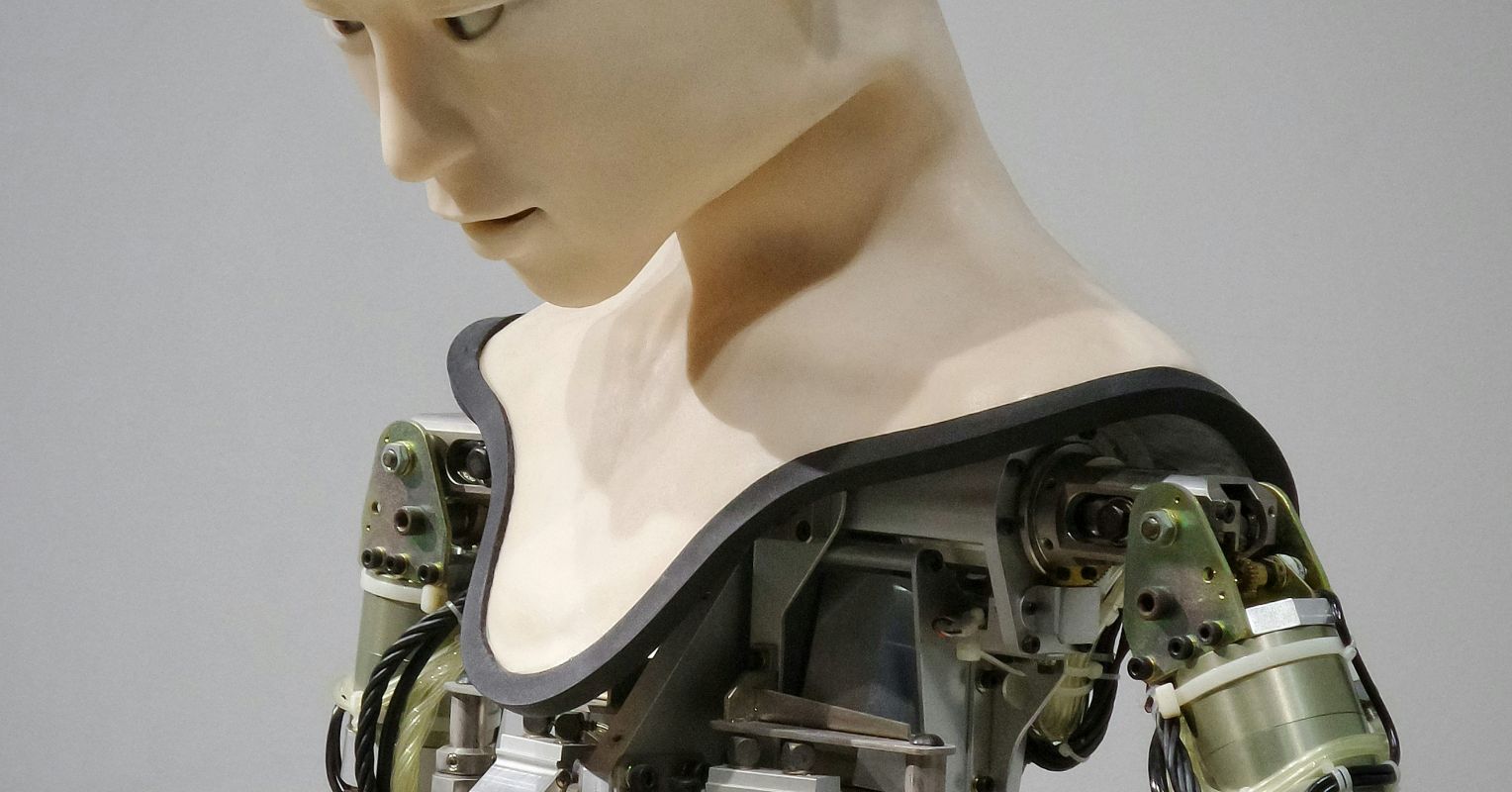

"A great deal of coverage has focused on AI psychosis and very serious human-AI romantic relationships, creating a narrative that a deep level of human-AI connection is primarily fringe, or only happens for people already vulnerable to mental health delusions, conspiracy theories, or drawn to alternative relationship expressions. Some suggest that these intense connections might occur because of the " loneliness epidemic," or the "boy crisis.""

Humans commonly form emotional attachments to AI chatbots because humans are wired for connection and meaning-making. Cognitive biases such as anthropomorphism and attribution accelerate attachment and can create perceptions of consciousness where none exists. Media coverage often emphasizes extreme cases like AI psychosis and romantic relationships, which obscures more common supportive or mundane bonds. Even brief interactions with simple programs can prompt delusional thinking in otherwise normal people. Many people naturally treat chatbots politely and attribute human characteristics to large language models. These attachments are reshaping how people think, feel, and relate to others and machines.

Read at Psychology Today

