"During election years, this subject crops up again and again, showing that one person's For You Page (FYP) might look wholly alien to another's. For example, a Bernie-bro feminist cat dad's feed will differ significantly from a MAGA tradwife influencer's daily scroll."

"As a society, we've largely accepted that this is a fact of life: it is the user's responsibility to break through the bubble and, crucially, not to believe everything they read on the internet."

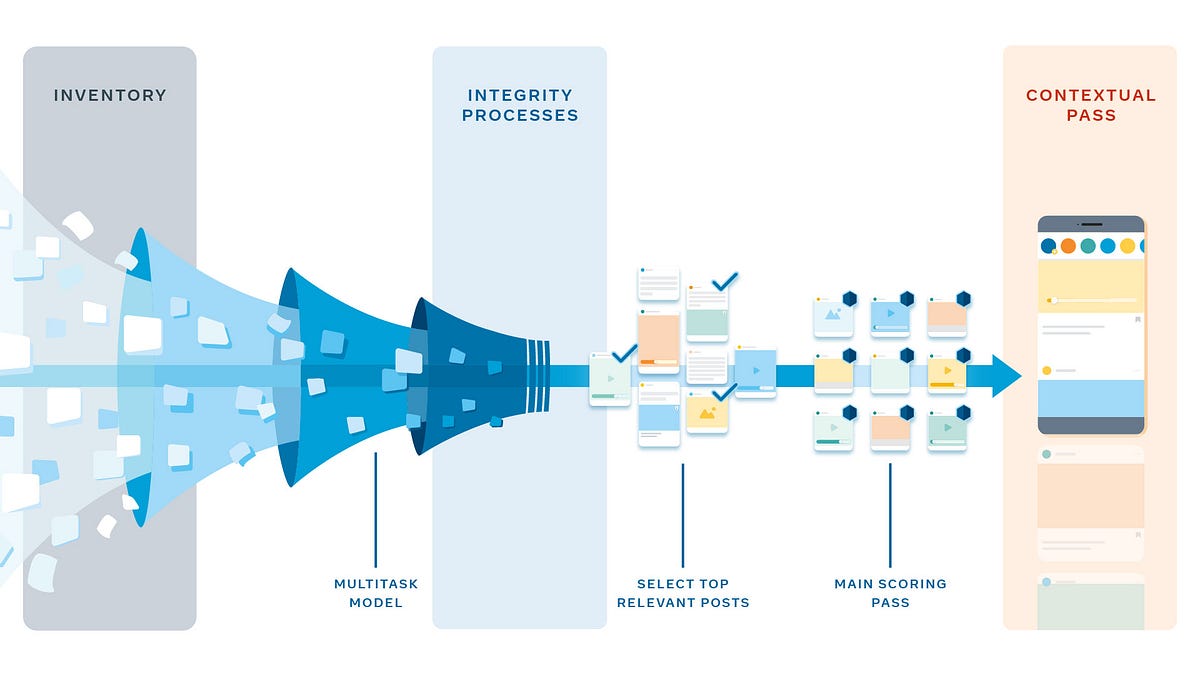

"But why have we surrendered to the all-powerful algorithm? Why is this the responsibility of the individual rather than the company? Why don't we demand more control over it, or at the very least a better understanding of how it works?"

"How do social media companies like Meta and ByteDance offer explanations and control over algorithmic feeds?"

The article discusses social media echo chambers and filter bubbles, emphasizing that users can encounter radically different content depending on what their feed's algorithm chooses to show them. These differences become especially pronounced during election years, prompting discussion of how little transparency and control users have over their feeds. The article also questions why society has accepted it as normal that individuals, rather than platforms, are responsible for breaking out of these bubbles, and it raises critical questions about the accountability of social media companies such as Meta and ByteDance for their algorithms and the effect those algorithms have on what users see.

Read at Medium
