#snowflake

#cloud-computing #anthropic #enterprise-ai #data-warehousing #databricks #agentic-ai #data-governance

Business intelligence

from Fast Company

6 days ago

A $200-million Snowflake-Anthropic partnership could be a game changer for enterprise AI

Snowflake and Anthropic are building a governed control plane that runs Claude models where enterprise data resides to enable secure, multistep AI without moving data.

from 24/7 Wall St.

1 week ago

Are Snowflake's Earnings the Latest Sign of the Coming AI Winter?

Snowflake released its third-quarter earnings yesterday, posting results that topped Wall Street estimates. Product revenue climbed 28% year-over-year to $1.21 billion, while adjusted earnings hit $0.35 per share, beating the consensus estimate of $0.31. Yet the stock plunged 11% in midday trading today as investors fixated on the company's fourth-quarter guidance.

Business intelligence

from InfoWorld

1 month ago

Databricks fires back at Snowflake with SQL-based AI document parsing

Databricks and Snowflake are at it again, and the battleground is now SQL-based document parsing. In an intensifying race to dominate enterprise AI workloads with agent-driven automation, Databricks has added SQL-based AI parsing capabilities to its Agent Bricks framework, just days after Snowflake introduced a similar ability inside its Intelligence platform. The new abilities from Snowflake and Databricks are designed to help enterprises analyze unstructured data, preferably using agent-automated SQL, backed by their individual existing technologies, such as Cortex AISQL and Databricks' AI Functions.

Artificial intelligence

from InfoWorld

1 month ago

Snowflake to acquire Datometry to bolster its automated migration tools

SnowConvert AI excels at static code conversion, but it still requires code extraction and re‑insertion. Hyper‑Q complements this with on‑the‑fly translation to tackle dynamic constructs and application‑embedded SQL that converters often miss.

Software development

from Business Insider

1 month ago

An influencer's interview with Snowflake's CRO triggered an 8-K filing

Data-storage company Snowflake filed an 8-K with the SEC on Monday after an executive spoke to an influencer who posts under the account name "theschoolofhardknockz" on Instagram and TikTok. Though the filing doesn't name the executive, he identifies himself as Chief Revenue Officer Mike Gannon in the video, which had more than 555,000 views on TikTok and nearly 138,000 likes on Instagram as of Wednesday afternoon.

Business

Artificial intelligence

from 24/7 Wall St.

1 month ago

Snowflake vs. Palantir: Buy, Sell, or Hold After Their Strong AI-Led Earnings?

Snowflake and Palantir's collaboration and strong results position them to accelerate enterprise AI adoption and benefit from continued partnership-driven industry growth.

from The Register

4 months ago

Snowflake builds Spark clients for its own analytics engine

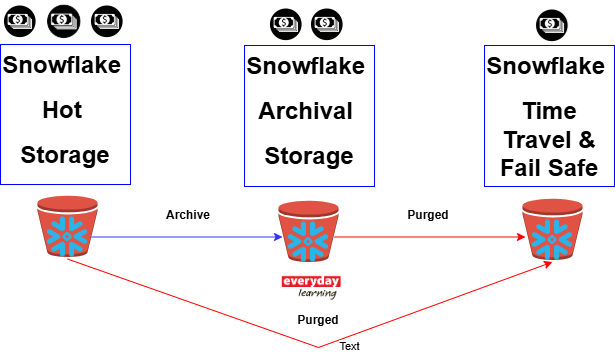

Customers have been using Spark for a long time to process data and get it ready for use in analytics or in AI. Running separate systems with different compute engines creates complexity in governance and infrastructure.

Data science

from InfoWorld

6 months ago

Databricks Data + AI Summit 2025: Five takeaways for data professionals, developers

The addition of the managed PostgreSQL database to the Data Intelligence platform will allow developers to quickly build and deploy AI agents without having to concurrently scale compute and storage.

Business intelligence

Data science

from Techzine Global

6 months ago

Snowflake makes building AI apps and agents easier

Snowflake introduces new features to ease AI application development.

Agentic Products on Snowflake Marketplace enable direct use of AI without moving data.

External data integration is simplified for better AI application performance.