"Privacy-preserving data publishing is crucial for safe data sharing. It relies on formal models such as k-anonymity to keep individuals from being re-identified in shared datasets."

"The research outlines various NLP approaches to maintain privacy while processing sensitive text data, emphasizing the importance of frameworks that ensure compliance with privacy regulations."

"Text anonymization strategies, which draw on entity recognition and are assessed with anonymization benchmarks, aim to balance data utility with privacy and lay a foundation for future privacy-focused NLP applications."

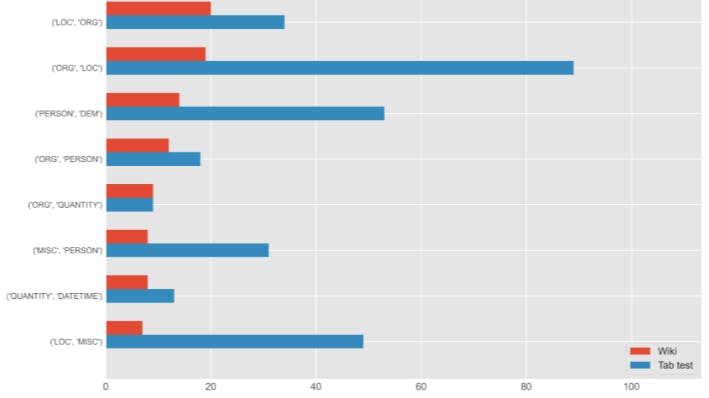

"The analysis of privacy risk indicators, with a focus on evaluation metrics and experimental results, reveals significant insights into ensuring the safety of shared data."

The article discusses methods for privacy-preserving data publishing in the context of Natural Language Processing (NLP). It describes privacy models, including k-anonymity, and how they are adapted to text data. The research focuses on anonymization techniques, entity recognition, and privacy risk indicators. By evaluating different methodologies and reporting experimental results, it highlights the trade-off between data utility and privacy. This work lays the groundwork for future technologies that safeguard privacy in shared datasets, particularly those containing sensitive information.
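
To make the k-anonymity idea concrete, here is a minimal sketch of the classic tabular definition: a dataset is k-anonymous if every combination of quasi-identifier values is shared by at least k records. This is not the article's text-specific adaptation. The function name `k_anonymity`, the field names, and the toy records are all hypothetical and chosen only for illustration.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group of records that share
    the same values for all quasi-identifier fields (0 if empty)."""
    groups = Counter(
        tuple(record[field] for field in quasi_identifiers)
        for record in records
    )
    return min(groups.values()) if groups else 0


# Hypothetical, already-generalized records (age ranges, masked ZIP codes).
records = [
    {"age": "30-39", "zip": "130**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "130**", "diagnosis": "asthma"},
    {"age": "40-49", "zip": "148**", "diagnosis": "flu"},
    {"age": "40-49", "zip": "148**", "diagnosis": "diabetes"},
    {"age": "40-49", "zip": "148**", "diagnosis": "flu"},
]

k = k_anonymity(records, ["age", "zip"])
print(f"The dataset is {k}-anonymous with respect to age and zip")
```

In this toy data the smallest group has two records, so an attacker who knows someone's age range and masked ZIP code cannot narrow them down to fewer than two rows. Treating more fields as quasi-identifiers shrinks the groups and lowers k, which is the utility versus privacy tension the summary refers to.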

Read at Hackernoon
