#data-processing

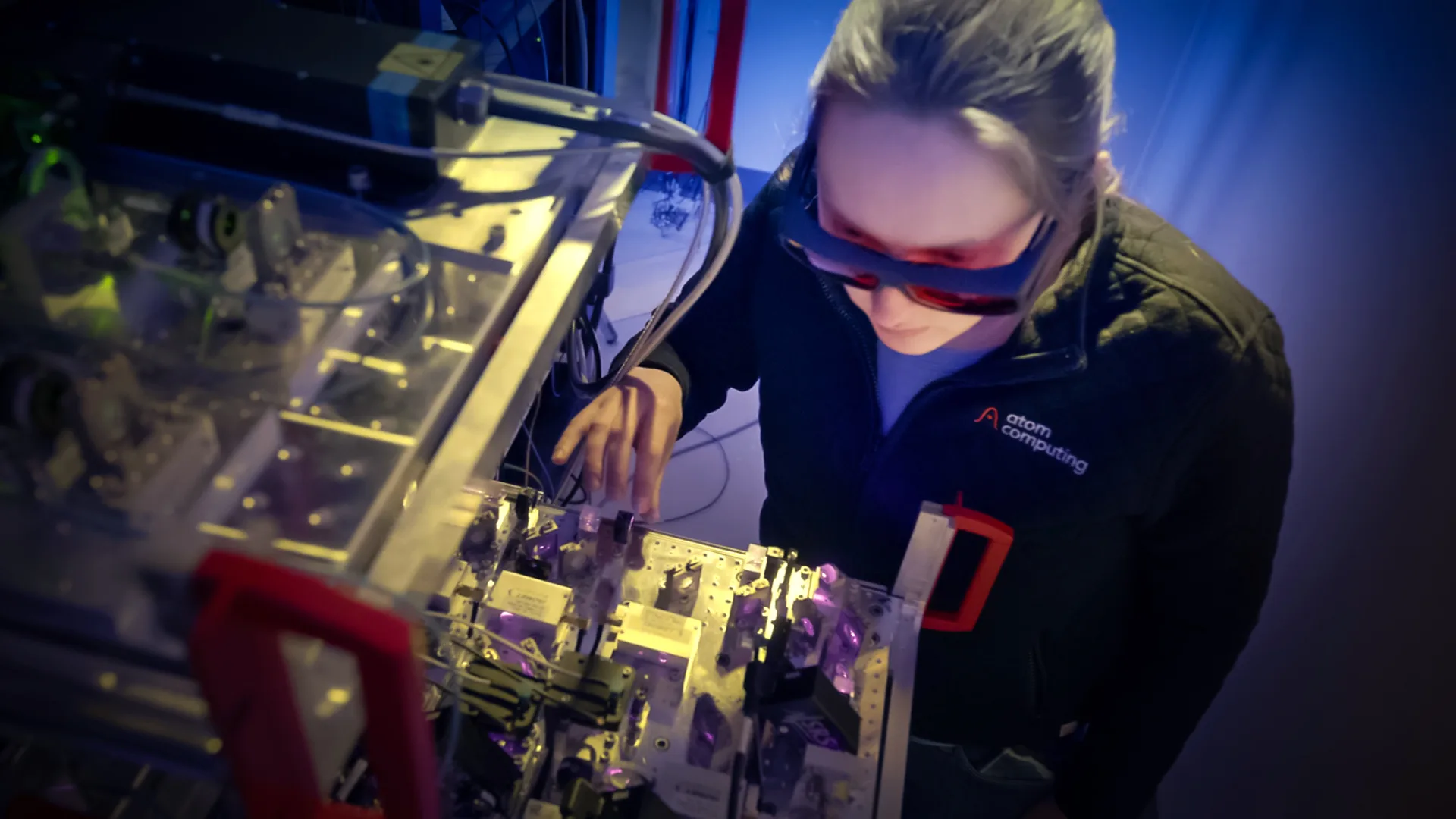

#artificial-intelligence #apache-spark #machine-learning #ai #quantum-computing #big-data #architecture

from The Register

2 months ago

Google Workspace AI 'smart features' are on by default

Engineering YouTuber Dave Jones noticed this week that he had been opted into a set of new Workspace smart features without ever being asked. According to Google's help page for the features, the point of the on-by-default settings is to add its Gemini AI across Workspace in order to suck in all your Gmail, Calendar, Chat, Drive, and Meet data so that it can all be cross-referenced.

Privacy technologies

from Fast Company

5 months ago

What if the future looks exactly like the past?

When Peter Drucker first met IBM CEO Thomas J. Watson in the 1930s, the legendary management thinker and journalist was somewhat baffled. "He began talking about something called data processing," Drucker recalled, "and it made absolutely no sense to me. I took it back and told my editor, and he said that Watson was a nut, and threw the interview away."

Business

from ChannelPro

5 months ago

Channel Focus: All you need to know about Snowflake's partner program

The Snowflake Platform enhances enterprises' data management under an AI Data Cloud, focusing on self-managed services, governance, and visibility without requiring extensive end-user hardware.

Artificial intelligence

from Medium

8 months ago

Build Multi-Agentic AI Agents with AWS Bedrock from Scratch..

A multi-agent system is built so that several agents can collaborate on handling user queries. The agent named 'bedrock-supervisor-agent' assesses each user question about accommodations or restaurants and directs it accordingly.
