#human-ai-collaboration

#ai-agents #ai #decision-making #ai-assisted-design #generative-ai #ai-in-marketing #creativity #automation

from TechCrunch

3 days ago

Humans& thinks coordination is the next frontier for AI, and they're building a model to prove it

Humans&, a new startup founded by alumni of Anthropic, Meta, OpenAI, xAI, and Google DeepMind, thinks closing that gap is the next major frontier for foundation models. The company this week raised a $480 million seed round to build a "central nervous system" for the human-plus-AI economy. The startup's "AI for empowering humans" framing has dominated early coverage, but the company's actual ambition is more novel: building a new foundation model architecture designed for social intelligence, not just information retrieval or code generation.

Startup companies

from Fortune

1 week ago

I lead IBM Consulting, here's how AI-first companies must redesign work for growth

Across every industry, organizations are investing heavily in the potential of artificial intelligence to reshape how they operate and grow. Nearly 80% of executives expect AI to significantly contribute to revenue by 2030, yet only 24% know where that revenue might come from. This isn't an awareness gap. It's an architecture gap. The companies already capturing AI's value aren't waiting to discover it through pilots and proofs-of-concept.

Artificial intelligence

from Nature

2 weeks ago

AI can spark creativity - if we ask it how, not what, to think

When a scientist feeds a data set into a bot and says "give me hypotheses to test", they are asking the bot to be the creator, not a creative partner. Humans tend to defer to ideas produced by bots, assuming that the bot's knowledge exceeds their own. And, when they do, they end up exploring fewer avenues for possible solutions to their problem.

Artificial intelligence

from Security Magazine

4 weeks ago

Humans at the Center of AI Security

Whenever the conversation turns to AI's role in cybersecurity, one question inevitably surfaces - sometimes bluntly, sometimes between the lines: "If AI can spot patterns faster than I can, will it still need me?" It's a fair question - and one that reflects a deeper anxiety about the future of security careers. AI is everywhere now: embedded in email gateways, SOC workflows, identity systems, and cloud defenses. But here's the truth: AI isn't erasing security roles. It's reshaping them.

Artificial intelligence

fromFast Company

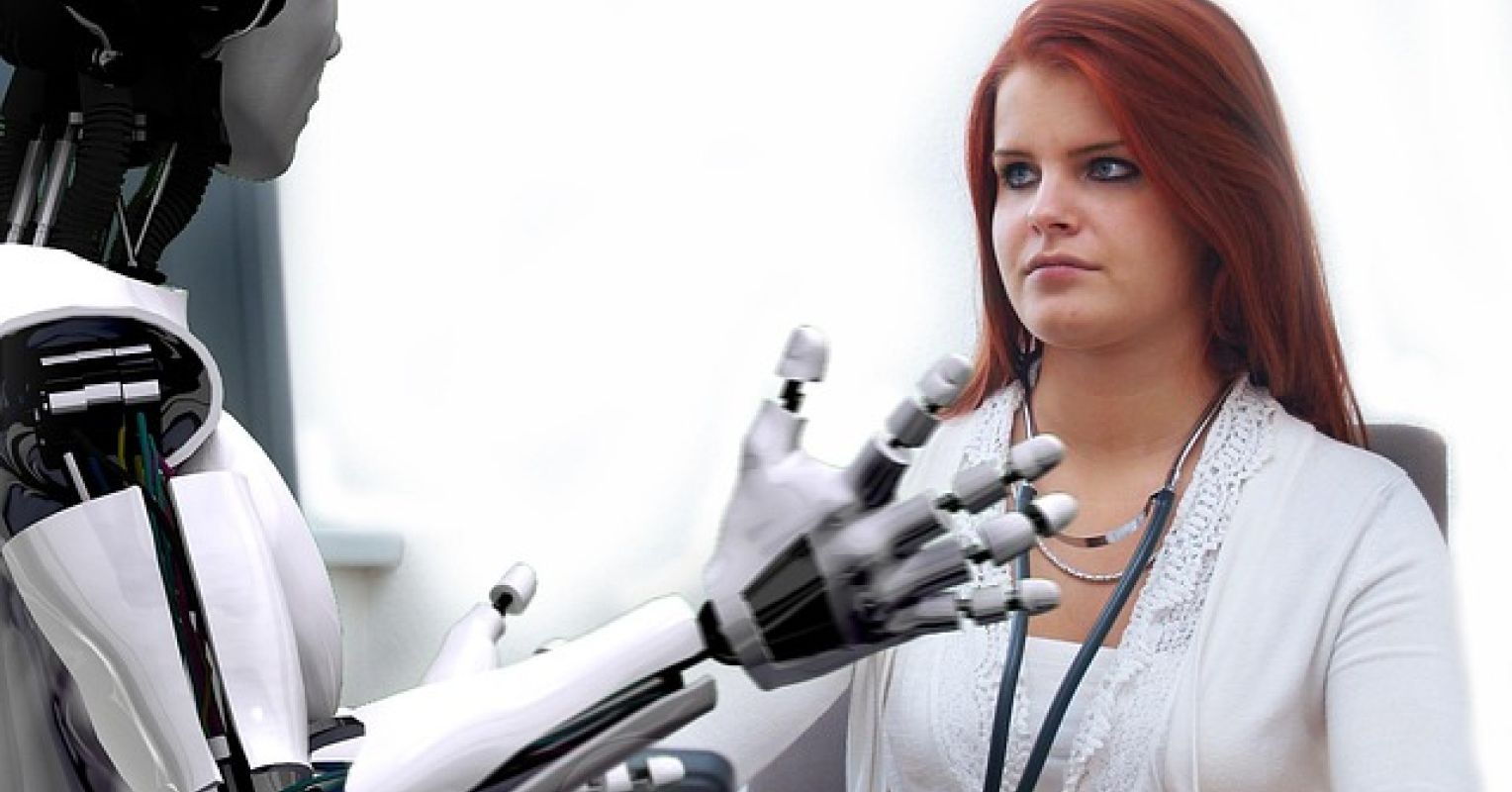

1 month agoThe future of work isn't man versus machine. It's man plus machine

There's no shortage of apocalyptic headlines about the future of work in the era of artificial intelligence. For workers, the technology has inflicted anxiety and uncertainty, provoking questions of when, how many, and which kinds of workers will be replaced. Companies have been propelled into a FOMO fury to integrate AI expediently or miss out on efficiency, cost savings, and competitive advantage. The disruption is inevitable, but from where I sit at the nexus of employee mental health and technology, we're asking the wrong questions.

Mindfulness

from Fast Company

1 month ago

How to transform AI from a tool into a partner

The conversation about AI in the workplace has been dominated by the simplistic narrative that machines will inevitably replace humans. But the organizations achieving real results with AI have moved past this framing entirely. They understand that the most valuable AI implementations are not about replacement but collaboration. The relationship between workers and AI systems is evolving through distinct stages, each with its own characteristics, opportunities, and risks. Understanding where your organization sits on this spectrum, and where it's headed, is essential for capturing AI's potential while avoiding its pitfalls.

Artificial intelligence

from Business Insider

1 month ago

The CHRO's job is getting a whole lot bigger

AI is changing how companies hire, train, and lead, and in the process, the chief human resources officer's role is expanding. Today's top HR leaders are becoming AI strategists, helping their organizations navigate the next wave of workplace transformation. "The old model of HR was employees over here, technology over there," says Thomas Hutzschenreuter, a university professor at the Technical University of Munich (TUM). "But the new model of work is human-AI collaboration." AI is a coworker now, he says, and that means that "HR has a bigger mandate. They need to understand not just people and culture, but go deeper into the strategy, the business, and the technology itself."

Artificial intelligence

from eLearning Industry

1 month ago

eLearning Industry's Guest Author Article Showcase [November 2025]

From nurturing curiosity to harnessing cognitive science principles and designing learning for co-intelligence, November's Guest Author Article Showcase spotlights some excellent pieces on human-AI convergence. What happens when humans focus solely on technology when designing learning with Artificial Intelligence? Why do we need to teach and cultivate critical thinking? Can AI tools amplify our humanity? In no particular order, here are last month's top guest author articles on this hot topic.

Education

from Fast Company

1 month ago

AI could transform the physical world. To do so, it will need human expertise

Humans are likewise crucial for Cephla, which is deploying AI-powered microscopes in life science research, drug discovery, and diagnostics, said Hongquan Li, cofounder and CEO of the biotech company. Starting with malaria detection, humans are collecting data for training, annotating images, and providing input on relevant clinical metrics, he said. "When those machines are deployed, humans operate those microscopes and interact with patients and make the critical clinical decisions," Li said.

Artificial intelligence

Artificial intelligence

from Fortune

2 months ago

AI agents and robots can already automate over 57% of U.S. work hours, but that doesn't mean half of all jobs are endangered, McKinsey says

AI can technically automate about 57% of U.S. work hours, but human skills and organizational redesign will shape collaboration rather than mass job loss.

from Medium

2 months ago

From Human Simulations to Work Companions: The Future of Human-AI Collaborative Intelligence

In the decades since natural language processing (NLP) first emerged as a research field, artificial intelligence has evolved from a linguistic curiosity into a catalyst reshaping how humans think, work, and create. Few people are as qualified to trace that journey, or to imagine what comes next, as Rada Mihalcea, Professor of Computer Science and Engineering and Director of the Michigan AI Lab at the University of Michigan.

Artificial intelligence

Artificial intelligence

from Fortune

2 months ago

Now we know that AI won't take all of our jobs, Silicon Valley has to fix its fundamental mistake: Automation theater has to end

AI should prioritize accountability, human-AI collaboration, and observable trustworthiness instead of optimizing for full autonomy.

from Medium

3 months ago

Design prompt-building interfaces

One of the most persistent problems in AI products today is this: people still aren't sure what these systems are capable of, or how to get the best out of them. This is because most AI tools still greet users with a single blank box and a placeholder as vague as it is open-ended: "Ask anything." The result? Without clear guidance, users start crafting prompts in haste, iterate through endless revisions, lose control of the flow, and gradually pollute the context with fragmented instructions.

UX design

from Psychology Today

3 months ago

Why AI and Human Thought Need to Stay Separate

Dialogue and learning thrive when two "minds" challenge each other from separate vantage points. Structural separation keeps collaboration alive, turning distance into the space where insight forms. In my earlier post on parallax cognition, I proposed that depth in thinking comes from contrast, not convergence. When two distinct perspectives, human and artificial, observe the same problem from different computational vantage points, something new emerges.

Artificial intelligence

Artificial intelligence

from Fortune

3 months ago

Goldman's chief information officer has 4 tips on how to AI-proof your career, including 'posing provocative, non-obvious questions'

Professionals should shift from task execution to orchestrating hybrid human-AI teams, asking provocative questions and coordinating AI to amplify creativity and outcomes.

from Fortune

3 months ago

Your new teammate is a machine. Are you ready?

Companies across various industries are investing heavily in AI to enhance employee productivity. A leader at the consulting firm McKinsey says he envisions an AI agent for every human employee. Soon, a factory manager will oversee a production line where human workers and intelligent robots seamlessly develop new products. A financial analyst will partner with an AI data analyst to uncover market trends. A surgeon will guide a robotic system with microscopic precision, while an AI teammate monitors the operation for potential complications.

Artificial intelligence

from eLearning Industry

3 months ago

AI Adoption: A Tale Of Three Organizations - And The L&D Leader's Opportunity

It's 2025, and AI is no longer knocking at our doors: It's already in the building. Our people are using AI for a wide range of daily tasks, the demand for candidates with AI skills is growing, and AI is itself an important tool in the recruiting process. Meanwhile, L&D and talent leaders have moved beyond outsourcing rote tasks to AI and are now engaging it as a strategic and creative partner.

Artificial intelligence

Artificial intelligence

from The Hacker News

3 months ago

How Leading Security Teams Blend AI + Human Workflows (Free Webinar)

Balanced workflows that intentionally combine human judgment, rules-based automation, and targeted AI produce explainable, reliable, and adaptable outcomes for security and operations.

from Medium

4 months ago

The Art of Invisible AI: What Granola's 70% Retention Teaches Us About Product Design

Sarah closed her laptop after another marathon day of back-to-back meetings. She glanced at her phone and smiled: a notification from Granola had already transformed her scattered notes from six different calls into beautifully organized summaries. No awkward meeting bots had invaded her calls. No clunky interfaces demanded her attention. The AI had simply worked. Invisibly. Perfectly. This moment captures something profound happening in product design right now: the rise of invisible AI that augments rather than replaces human intelligence.

Artificial intelligence

from The Atlantic

5 months ago

A Better Way to Think About AI

Sometimes it seems the most direct route is to automate wherever possible, and to keep iterating until we get it right. Here's why that would be a mistake: imperfect automation is not a first step toward perfect automation, any more than jumping halfway across a canyon is a first step toward jumping the full distance. Recognizing that the rim is out of reach, we may find better alternatives to leaping: for example, building a bridge, hiking the trail, or driving around the perimeter.

Artificial intelligence

from Fortune

6 months ago

Salesforce surpasses 1 million AI agent-customer conversations, says finance chief

Our support teams are able to focus on more complex customer questions. These are engagements that really require human judgment, creativity, empathy—all things that AI can't do.

Artificial intelligence
